Learning by doing is a powerful approach, especially when tackling complex subjects like Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG). To ease into these topics without becoming overwhelmed, I’ve compiled a list of five beginner-friendly projects that build in complexity. Let’s dive into these projects:

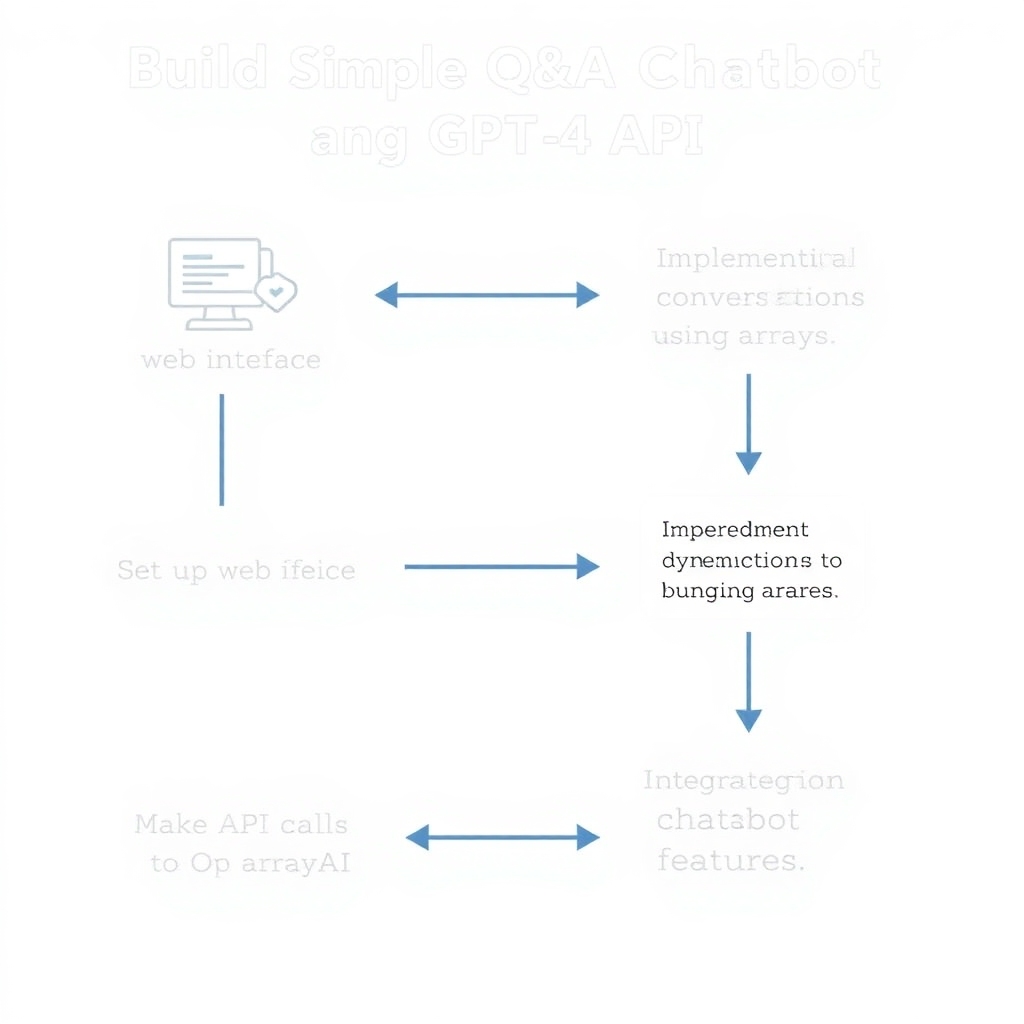

1. Building a Simple Q&A Chatbot using the GPT-4 API

Tutorial by: Tom Chant

This project guides you through creating a chatbot named “KnowItAll” with the GPT-4 API. It can answer questions, generate content, translate, and even write code. The tutorial covers everything from setting up a web interface using HTML, CSS, and JavaScript to connecting to the OpenAI API.

What You’ll Learn:

- Setting up an interactive chatbot interface.

- Managing conversation context with arrays for dynamic interactions.

- Making API calls to OpenAI and using GPT-4 models.

The flowchart below shows the process of building a simple Q&A chatbot with the GPT-4 API: setting up the web interface, implementing dynamic conversations using arrays, making API calls to OpenAI, and integrating the chatbot features.

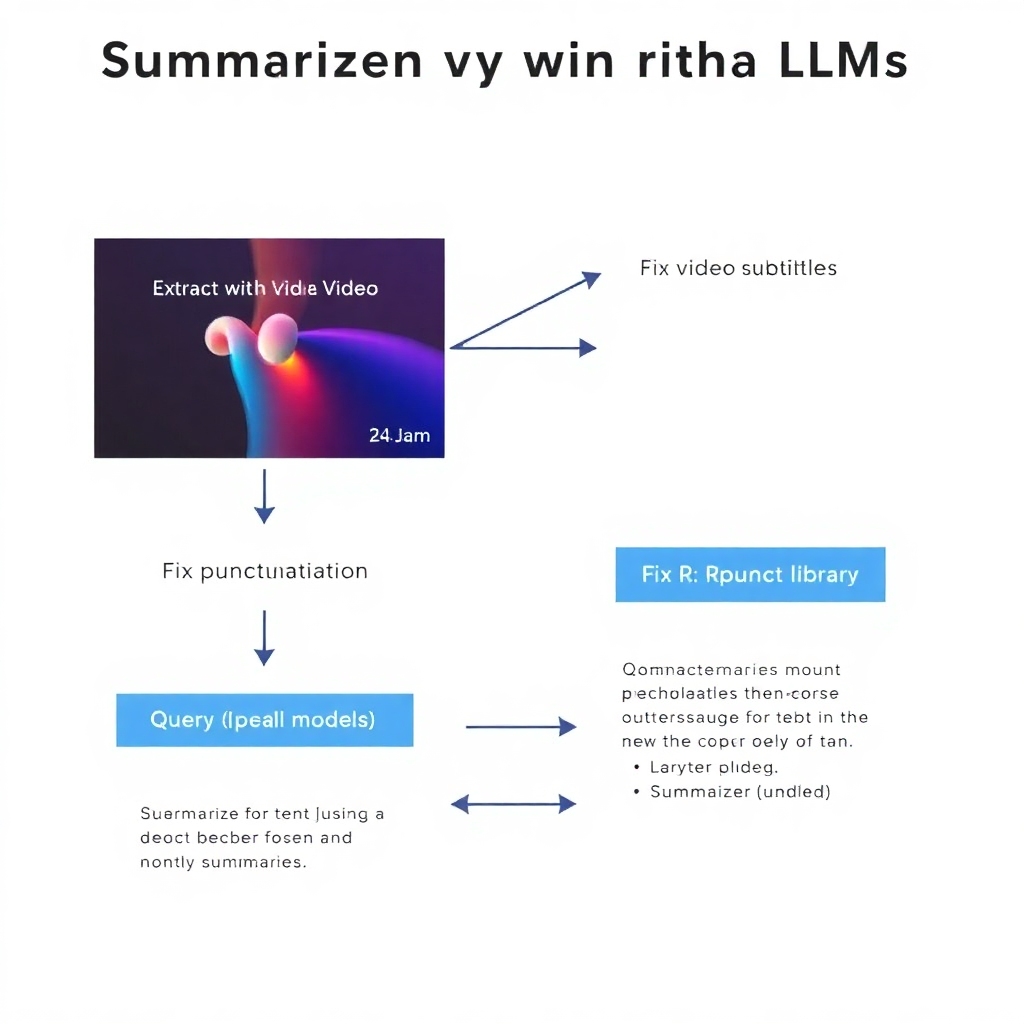
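
To make the "conversation context with arrays" idea concrete, here is a minimal sketch. The tutorial itself works in JavaScript; this version uses Python purely for illustration. The `role`/`content` message shape follows the OpenAI chat format, while `new_conversation`, `ask`, and `echo_model` are hypothetical names, and `echo_model` is a stand-in so the example runs without an API key.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def new_conversation(system_prompt: str) -> List[Message]:
    """Start a history list with a single system message."""
    return [{"role": "system", "content": system_prompt}]


def ask(history: List[Message], question: str,
        complete: Callable[[List[Message]], str]) -> str:
    """Append the user turn, get a reply, and append the assistant turn."""
    history.append({"role": "user", "content": question})
    answer = complete(history)  # the whole history is sent on every turn
    history.append({"role": "assistant", "content": answer})
    return answer


def echo_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for a real API call; it only echoes the last turn."""
    return f"(reply to: {messages[-1]['content']})"


history = new_conversation("You are KnowItAll, a helpful assistant.")
ask(history, "What is RAG?", echo_model)
ask(history, "Give me an example.", echo_model)
print(len(history))  # one system message plus two user/assistant pairs
```

Because the full array goes out with each request, the model can resolve a follow-up like "Give me an example" against the earlier question. In a real app you would swap `echo_model` for a function that calls the OpenAI API.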

2. Summarizing a Video with LLMs

Tutorial by: Agnieszka Mikołajczyk-Bareła

Learn to summarize YouTube videos using LLMs and Automatic Speech Recognition (ASR). This tutorial walks through extracting video subtitles, fixing punctuation, and sending text to OpenAI for summarization.

What You’ll Learn:

- Using the YouTube Transcript API to extract subtitles.

- Enhancing readability by fixing ASR punctuation.

- Querying OpenAI models for summarization or content queries.

The diagram below illustrates the process of summarizing a video with LLMs: extracting the video subtitles, fixing punctuation with the Rpunct library, and querying OpenAI models to summarize the content.

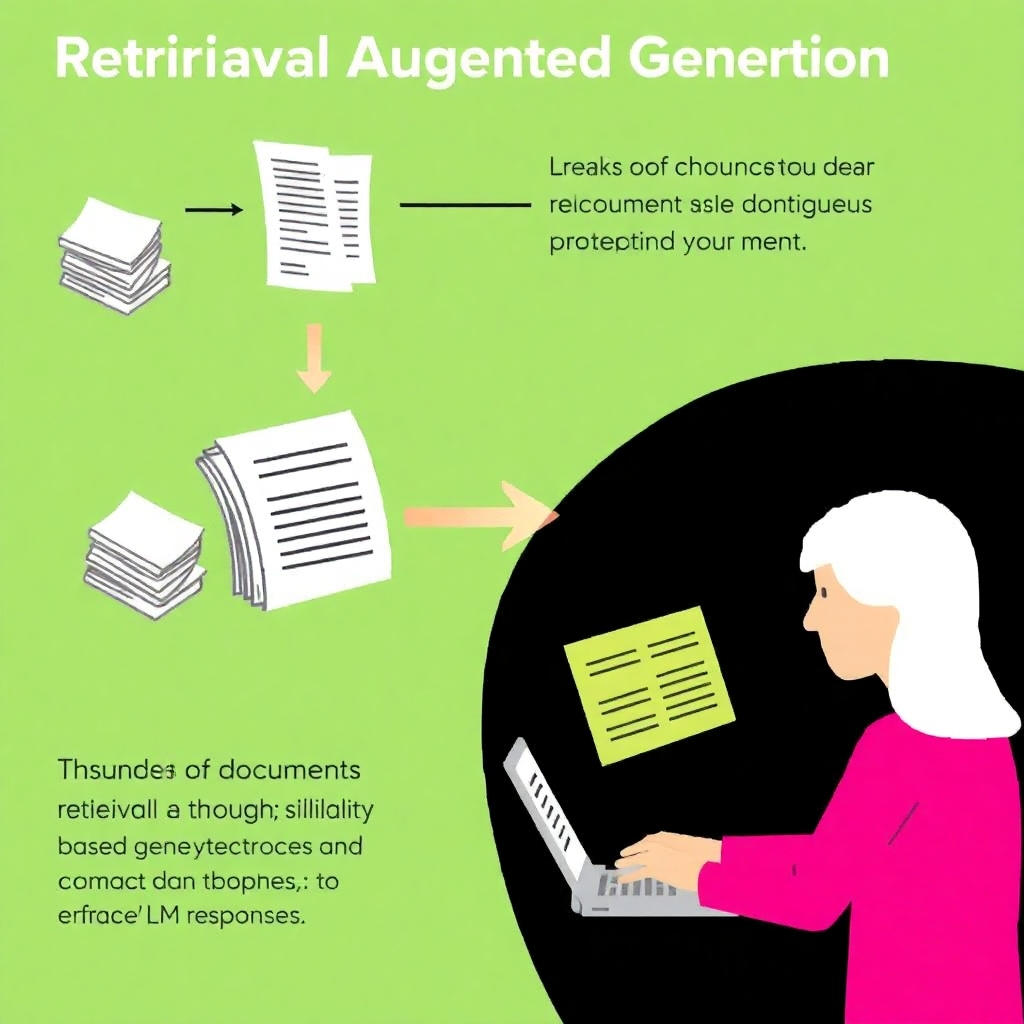
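
As a rough sketch of the step between extracting subtitles and calling the model, the snippet below joins transcript segments into one string and wraps it in a summarization prompt. I'm assuming each segment is a dictionary with a `text` key, which may differ from what your transcript library returns. `join_segments` and `build_summary_messages` are hypothetical helpers, and no API call is made here.

```python
from typing import Dict, List


def join_segments(segments: List[Dict]) -> str:
    """Concatenate non-empty segment texts into a single transcript string."""
    parts = [seg["text"].strip() for seg in segments]
    return " ".join(p for p in parts if p)


def build_summary_messages(transcript: str, max_words: int = 100) -> List[Dict[str, str]]:
    """Build chat-style messages asking a model to summarize the transcript."""
    return [
        {"role": "system", "content": "You summarize video transcripts."},
        {
            "role": "user",
            "content": (
                f"Summarize the following transcript in at most {max_words} words:\n\n"
                f"{transcript}"
            ),
        },
    ]


# Assumed segment shape, for illustration only.
segments = [
    {"text": "hello and welcome ", "start": 0.0},
    {"text": "", "start": 1.0},
    {"text": "today we talk about embeddings", "start": 2.5},
]

transcript = join_segments(segments)
messages = build_summary_messages(transcript)
print(transcript)
```

The punctuation-fixing step from the tutorial would sit between `join_segments` and `build_summary_messages`; it is left out here because it depends on an external model.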

3. Building Retrieval Augmented Generation (RAG) from Scratch

Tutorial by: Mahnoor Nauyan

This project demonstrates how to build a RAG solution from scratch, focusing on document processing, chunking, and generating embeddings without relying on libraries.

What You’ll Learn:

- Understanding RAG and its usefulness for private data.

- Chunking documents for effective indexing.

- Using cosine similarity for relevant data retrieval to enhance model responses.

The illustration below shows the concept of Retrieval Augmented Generation (RAG): documents are split into chunks, and similarity-based retrieval pulls the chunks most relevant to a user’s query to enhance the LLM’s response.

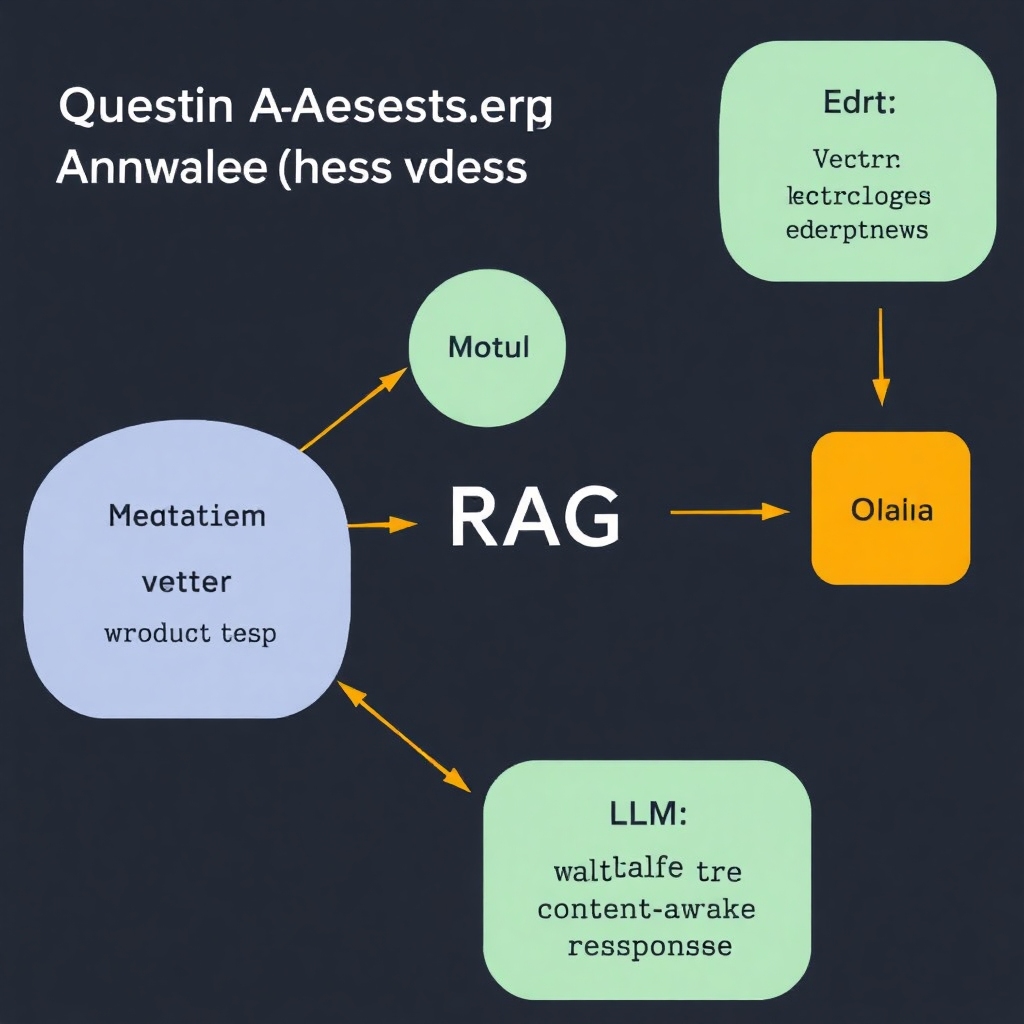
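
Chunking and cosine-similarity retrieval fit in a few lines of plain Python. The sketch below is not the tutorial's code: it uses word-count vectors as a stand-in for real embeddings so it runs with no dependencies, and `chunk_words`, `embed`, `cosine`, and `retrieve` are names I made up for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_words(text: str, size: int = 8, overlap: int = 2) -> List[str]:
    """Split text into word windows of `size`, each sharing `overlap` words with the previous one."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, len(words), step)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


chunks = [
    "The cat sat on the mat",
    "Paris is the capital of France",
    "Python is a programming language",
]
best = retrieve("What is the capital of France?", chunks)
print(best[0])
```

In a full RAG pipeline, the retrieved chunk would be pasted into the prompt as context before the user's question, and `embed` would call an actual embedding model.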

4. Building Your Own Question Answering System Using RAG

Tutorial by: Abhirami VS

Enhance LLM accuracy using RAG by incorporating external data to reduce hallucination and bias. The tutorial uses tools like Mistral-7B for generating responses and Chroma for data retrieval.

What You’ll Learn:

- Setting up a RAG pipeline with external data sources.

- Employing vector stores like Chroma for data retrieval.

- Using open-source LLMs like Mistral-7B to generate accurate answers.

The diagram below shows the components of a question answering system using RAG: external knowledge sources, a vector store, and an LLM interact to provide context-aware responses.

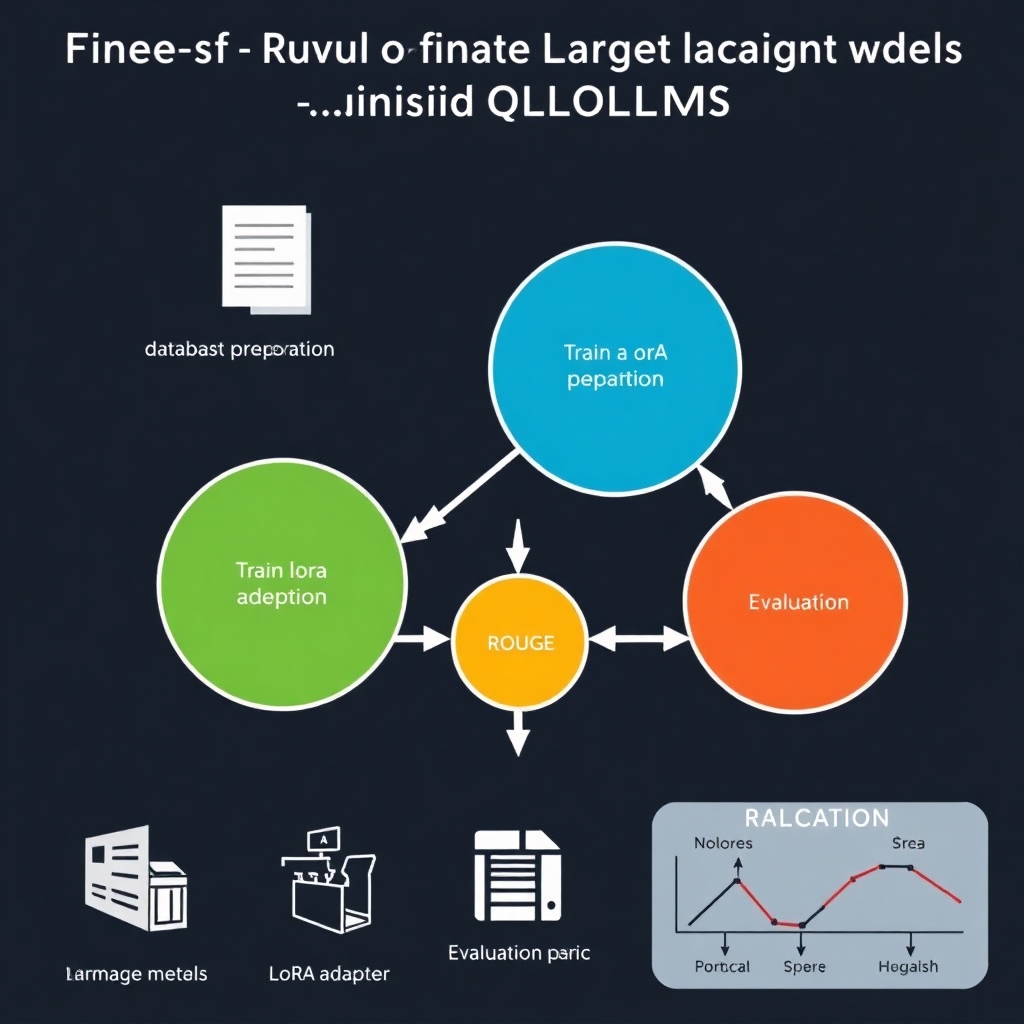

5. Fine-Tuning Large Language Models (LLMs) with QLoRA

Tutorial by: Sumit Das

This final tutorial focuses on fine-tuning pre-trained LLMs using QLoRA, allowing for efficient customization with minimal resources.

What You’ll Learn:

- Fine-tuning LLMs with QLoRA on custom datasets.

- Utilizing HuggingFace libraries for training and evaluation.

- Evaluating your models with metrics like ROUGE.
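
To give a feel for what a ROUGE score measures, here is a simplified ROUGE-1 sketch based on unigram overlap. It skips the stemming and tokenization rules that real ROUGE implementations apply, so treat it as an illustration rather than a replacement for the evaluation library used in the tutorial. The function name `rouge1` is my own.

```python
from collections import Counter
from typing import Dict


def rouge1(candidate: str, reference: str) -> Dict[str, float]:
    """Simplified ROUGE-1: unigram precision, recall, and F1 on whitespace tokens."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    # Clipped overlap: each word counts at most as often as it appears in both texts.
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge1("the cat sat on the mat", "the cat lay on the mat")
print(scores)
```

Here five of the six words overlap, so precision, recall, and F1 all come out to 5/6. A fine-tuned summarization model would be scored the same way, comparing its outputs against reference summaries.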

The fine-tuning process with QLoRA covers dataset preparation, training a LoRA adapter, and evaluating the result with metrics.

By completing these projects in order, you’ll build confidence in working with LLMs and RAG, setting a solid foundation for more advanced AI challenges ahead.