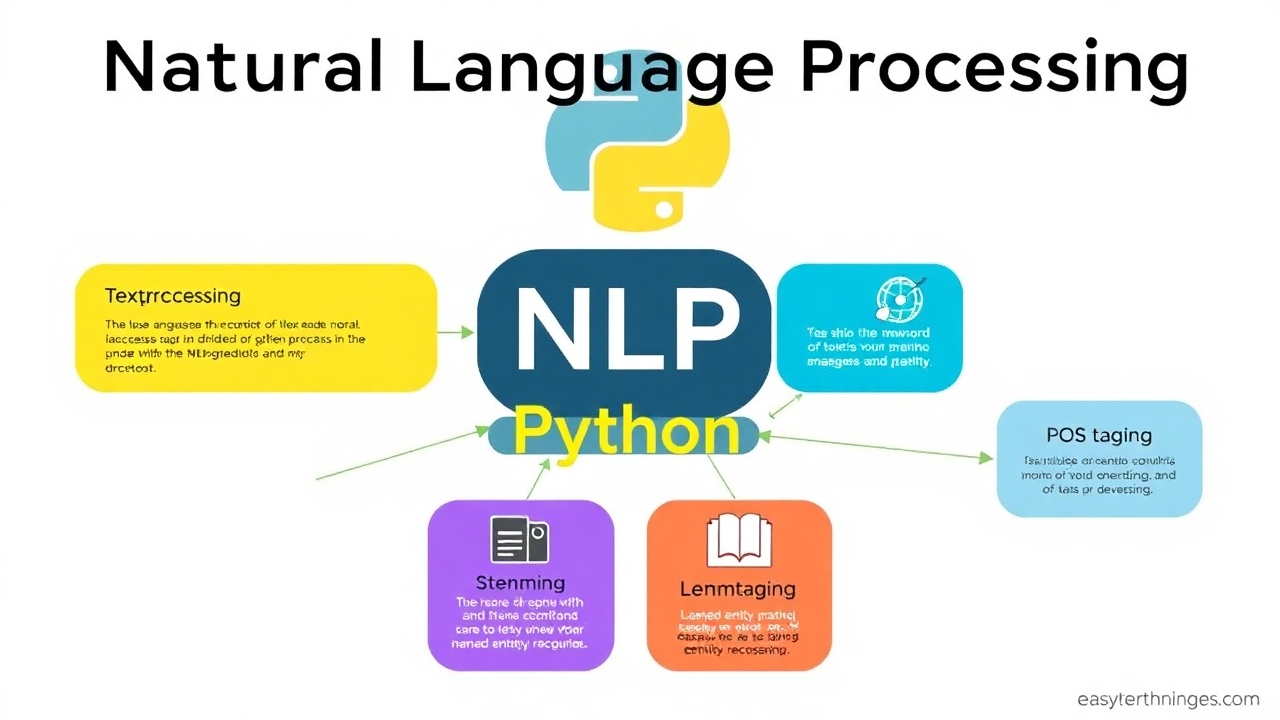

Introduction to Natural Language Processing (NLP)

Natural Language Processing (NLP) is an essential skill for developers looking to enhance their toolkit. Whether you’re new to the field or want to build applications powered by large language models (LLMs), this guide serves as an entry point. By dedicating a few weeks and following a code-first approach using Python’s NLTK (Natural Language Toolkit), you can master the fundamentals of NLP.

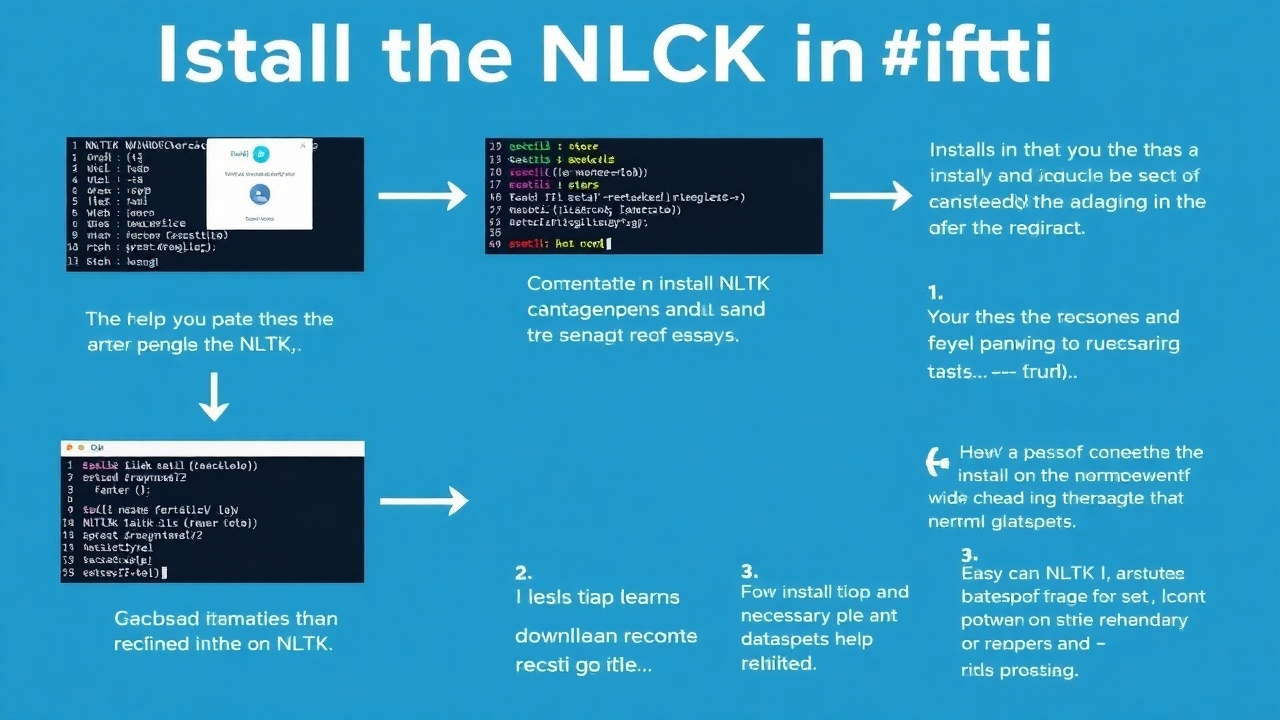

Setting Up NLTK

Before performing NLP tasks, it’s vital to set up the NLTK library. NLTK is equipped with a variety of text processing tools, including tokenizers, lemmatizers, part-of-speech (POS) taggers, and preloaded datasets. It’s your go-to toolbox for various NLP applications.

- Install the NLTK Library

To install NLTK, run the following command in your terminal:

pip install nltk

- Download Resources

After installation, download essential datasets and models:

import nltk

nltk.download('punkt_tab') # For tokenization (recent NLTK releases; older ones use 'punkt')

nltk.download('stopwords') # Stop words

nltk.download('wordnet') # Lexicon for lemmatization

nltk.download('averaged_perceptron_tagger_eng') # POS tagging

nltk.download('maxent_ne_chunker_tab') # Named Entity Recognition

nltk.download('words') # Word corpus for NER

Text Preprocessing

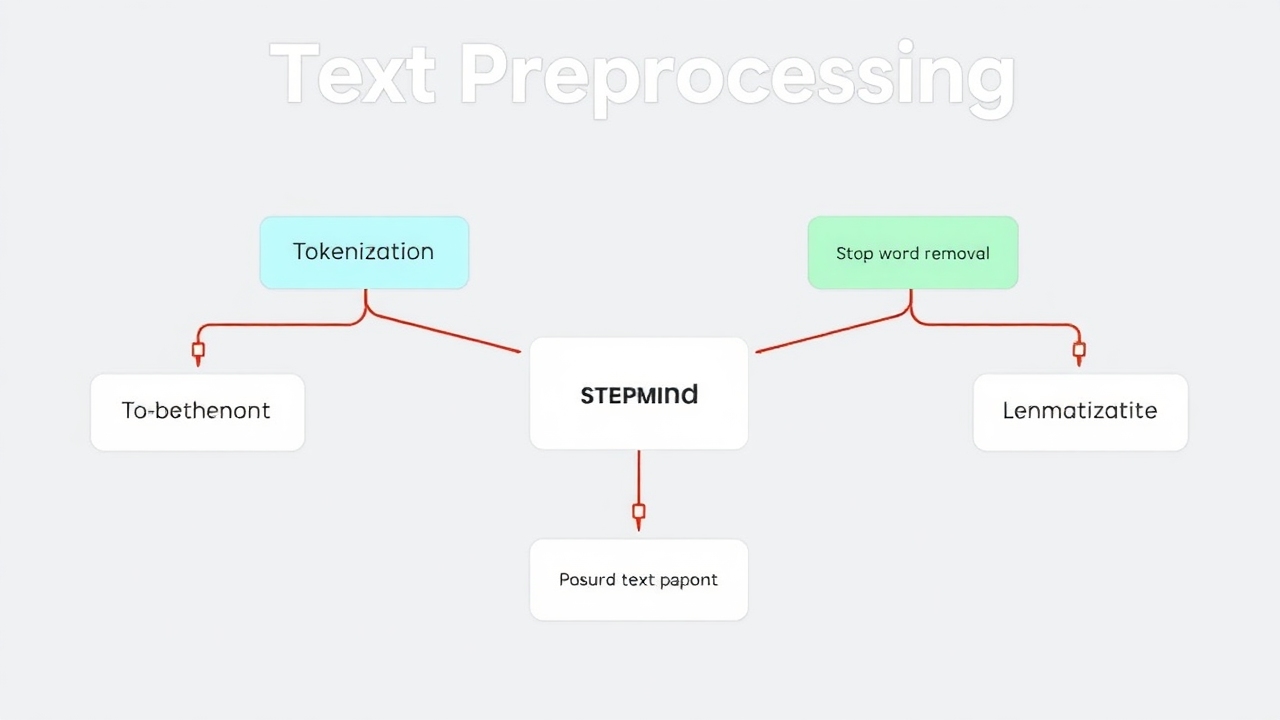

Text preprocessing is crucial in NLP for converting raw text into a structured format. We will cover key preprocessing steps: tokenization, stop word removal, and stemming.
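As a preview of how these three steps chain together, here is a minimal sketch that runs without downloading any NLTK data. It rests on a few assumptions that are not from the article: `RegexpTokenizer` stands in for `word_tokenize`, a tiny hand-written `STOP_WORDS` set stands in for NLTK's stop word corpus, and `preprocess` is a hypothetical helper name.

```python
from nltk.stem import PorterStemmer
from nltk.tokenize import RegexpTokenizer

# Assumption: a tiny hand-written stop list, standing in for NLTK's stopwords corpus
STOP_WORDS = {"the", "a", "an", "is", "are", "in", "of", "and", "to"}


def preprocess(text):
    """Tokenize, drop stop words, and stem the remaining tokens."""
    # Keep runs of word characters; punctuation is dropped by the pattern
    tokens = RegexpTokenizer(r"\w+").tokenize(text)
    kept = [token for token in tokens if token.lower() not in STOP_WORDS]
    stemmer = PorterStemmer()
    return [stemmer.stem(token) for token in kept]


result = preprocess("The cats are running in the garden.")
print(result)  # ['cat', 'run', 'garden']
```

The sections that follow walk through each step with NLTK's own tokenizers and stop word list.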

- Tokenization

Tokenization breaks text into smaller units called tokens (words, sentences, or sub-words). Below is the process:

from nltk.tokenize import word_tokenize, sent_tokenize

import string

text = "Natural Language Processing (NLP) is cool! Let's explore it."

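# Split into sentences first: sent_tokenize needs the punctuation to find boundaries

sentences = sent_tokenize(text)

# Then strip punctuation and split the cleaned text into words

cleaned_text = ''.join(char for char in text if char not in string.punctuation)

words = word_tokenize(cleaned_text)

- Stopwords Removal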

Stop words are very common words, such as “the”, “is”, and “in”, that carry little meaning on their own. Removing them helps focus on the significant words:

from nltk.corpus import stopwords

stop_words = set(stopwords.words('english'))

filtered_words = [word for word in words if word.lower() not in stop_words]

- Stemming

Stemming reduces words to a root form by stripping suffixes; for example, “running” becomes “run”. The result is not always a dictionary word. Using the Porter Stemmer:
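A quick way to get a feel for the stemmer is to run it on a few words by hand. The sketch below uses three sample words chosen for illustration (they are not from the article) and needs no downloaded data.

```python
from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

# Hand-picked sample words for illustration only
samples = ["running", "cats", "studies"]
stems = {word: stemmer.stem(word) for word in samples}

for word, stem in stems.items():
    print(f"{word} -> {stem}")
```

The stem produced for "studies" is "studi", which shows that stems are truncated forms rather than guaranteed dictionary entries. Applied to the filtered tokens from the previous step, the call looks like this: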

from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

stemmed_words = [stemmer.stem(word) for word in filtered_words]

Lemmatization

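Lemmatization differs from stemming by returning valid dictionary forms (lemmas). NLTK’s WordNetLemmatizer does not infer context on its own: it relies on the part of speech you pass in and defaults to noun. The snippet below passes pos='v', so every token is treated as a verb: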
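Since the lemmatizer depends on a part-of-speech hint, one common pattern is to translate Penn Treebank tags (the kind `nltk.pos_tag` returns) into the single-letter codes WordNet expects. The sketch below is one possible mapping, not the article's method: `penn_to_wordnet` is a hypothetical helper, and the tagged pairs are written by hand so the block runs without any downloads.

```python
def penn_to_wordnet(tag):
    """Map a Penn Treebank tag (e.g. 'VBG') to a WordNet POS letter."""
    if tag.startswith("J"):
        return "a"  # adjective
    if tag.startswith("V"):
        return "v"  # verb
    if tag.startswith("RB"):
        return "r"  # adverb
    return "n"  # noun, which is also the lemmatizer's default


# Hand-written (token, tag) pairs in the shape that nltk.pos_tag returns
tagged = [("dogs", "NNS"), ("were", "VBD"), ("running", "VBG"), ("quickly", "RB")]
lemmatizer_args = [(word, penn_to_wordnet(tag)) for word, tag in tagged]
print(lemmatizer_args)
```

Each pair can then be passed on as `lemmatizer.lemmatize(word, pos=pos)`. The simplest call, shown next, skips this mapping: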

from nltk.stem import WordNetLemmatizer

lemmatizer = WordNetLemmatizer()

lemmatized_words = [lemmatizer.lemmatize(word, pos='v') for word in filtered_words]

Part-of-Speech Tagging

POS tagging identifies the grammatical category of words, aiding in understanding sentence structure:

from nltk import pos_tag

tagged_words = pos_tag(words)

Named Entity Recognition (NER)

NER identifies and classifies entities like names, organizations, and locations in text:
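The chunker returns its result as an `nltk.Tree` in which each recognized entity is a labeled subtree. The sketch below shows one way to pull the entities out of such a tree. The tree here is built by hand as a stand-in for real `ne_chunk` output, and `extract_entities` is a hypothetical helper name, so nothing needs to be downloaded to run it.

```python
from nltk.tree import Tree


def extract_entities(tree):
    """Return (entity text, label) pairs from a chunk tree."""
    entities = []
    for node in tree:
        # Entities are subtrees; ordinary tokens are plain (word, tag) tuples
        if isinstance(node, Tree):
            text = " ".join(token for token, _tag in node.leaves())
            entities.append((text, node.label()))
    return entities


# Hand-built stand-in for the output of ne_chunk (illustrative data only)
sample_tree = Tree("S", [
    Tree("PERSON", [("Ada", "NNP"), ("Lovelace", "NNP")]),
    ("worked", "VBD"),
    ("in", "IN"),
    Tree("GPE", [("London", "NNP")]),
])

entities = extract_entities(sample_tree)
print(entities)  # [('Ada Lovelace', 'PERSON'), ('London', 'GPE')]
```

The call that produces such a tree from the tagged tokens is: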

from nltk import ne_chunk

named_entities = ne_chunk(tagged_words)

Conclusion & Next Steps

In this guide, we explored the fundamentals of NLP using NLTK. Moving forward, consider:

- Working on text classification or sentiment analysis.

- Exploring other NLP libraries like spaCy or Hugging Face’s Transformers.
