A little over a year ago, Alec Radford and his co-authors introduced Deep Convolutional Generative Adversarial Networks (DCGANs), a major step forward in building generative models for images. A DCGAN produces new, often strikingly realistic images by pitting two deep neural networks against each other.

The appeal of generative models is that they can learn from large amounts of raw, unlabeled data. That points toward AI systems that build their own understanding of the world without needing hand-labeled examples. But let’s dive into a more playful application: generating 8-bit artwork for retro video games!

The Aim of Generative Models

Why would researchers spend time designing models that produce imperfect images? The idea is simple: if a system can generate convincing images of something, it must have learned what that subject looks like. Consider a dog. To a human, it’s easily recognizable as a furry creature with distinctive features; to a computer, it’s just an array of pixel colors.

Imagine training a computer on thousands of dog photos until it can create new dog images, perhaps even of specific breeds or from specific angles. That would suggest the model has learned what makes up a ‘dog’ (legs, tails, ears) without ever being told directly.

This is what makes generative models exciting: they let computers pick up concepts without explicit labels, unlike today’s supervised training methods, which depend on large amounts of human-labeled data.

If we can teach machines simple concepts like dogs, the possibilities extend to generating limitless stock photos, artwork, and even entertainment content. Who knows what the future holds?

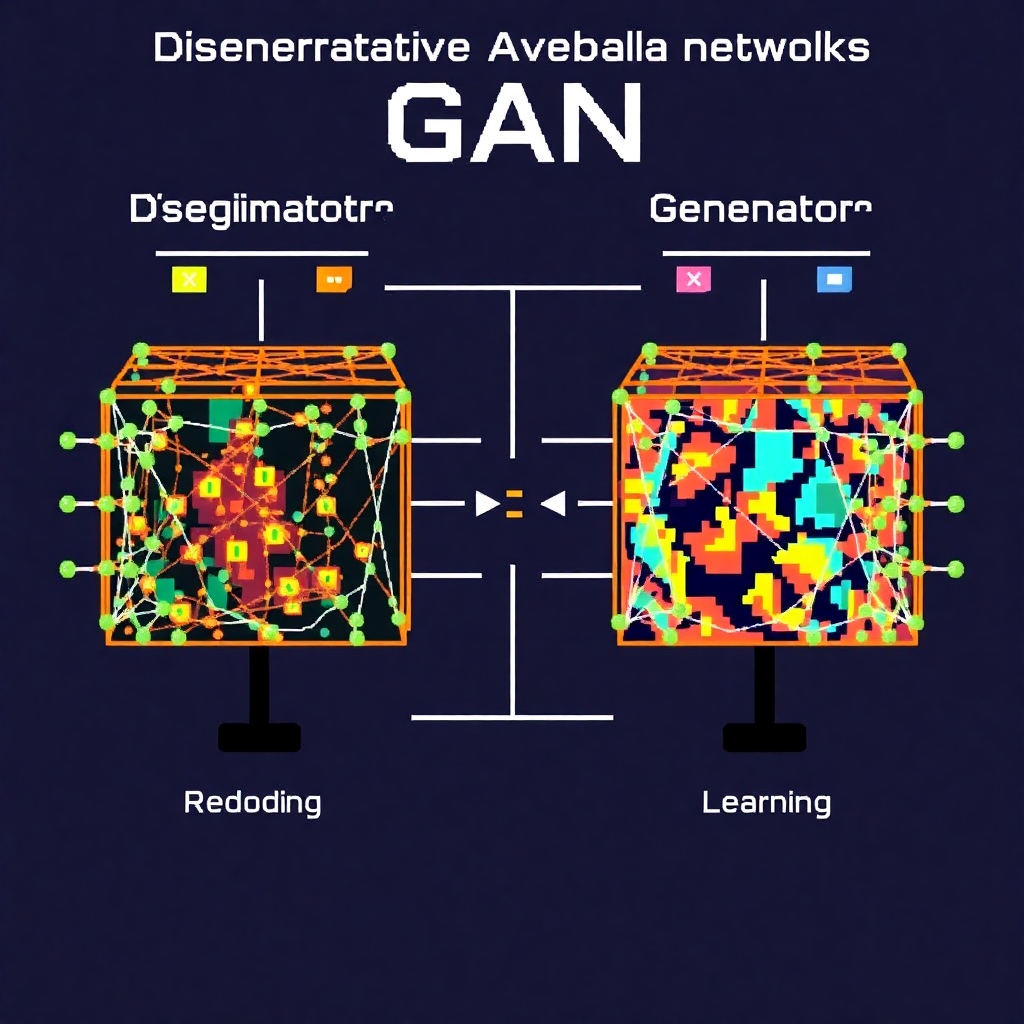

How DCGANs Operate

A DCGAN consists of two deep convolutional neural networks that compete against each other. The Discriminator acts as a judge: given an image, it estimates whether that image is real (taken from the training set) or fake. The Generator takes random noise as input and produces new images, trying to make them indistinguishable from real ones.
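
To make the judge-versus-forger setup concrete, here is a minimal sketch of the standard GAN objectives written with NumPy, assuming the Discriminator outputs a probability that its input is real. The names `discriminator_loss` and `generator_loss` are illustrative, not from any library, and the generator loss shown is the common "non-saturating" variant; the post does not say which loss was actually used.

```python
import numpy as np


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray, eps: float = 1e-12) -> float:
    """Binary cross-entropy for the judge: real images should score 1, fakes 0.

    d_real, d_fake: Discriminator outputs, i.e. probabilities in (0, 1).
    """
    d_real = np.clip(d_real, eps, 1 - eps)  # avoid log(0)
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake)))


def generator_loss(d_fake: np.ndarray, eps: float = 1e-12) -> float:
    """Non-saturating generator loss: the Generator wants its fakes scored as real."""
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return float(-np.mean(np.log(d_fake)))


if __name__ == "__main__":
    # A confident, correct judge: low Discriminator loss.
    print(discriminator_loss(np.array([0.99]), np.array([0.01])))  # ≈ 0.020
    # A completely confused judge that scores everything 0.5.
    print(discriminator_loss(np.array([0.5]), np.array([0.5])))  # ≈ 1.386 (2 * log 2)
    print(generator_loss(np.array([0.5])))  # ≈ 0.693 (log 2)
```

The two losses pull in opposite directions on the same quantity, `d_fake`: the Discriminator lowers its loss by pushing it toward 0, while the Generator lowers its loss by pushing it toward 1.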

In the early rounds, the Generator produces poor replicas, but the Discriminator is just as inexperienced and may still mistake these fakes for genuine images. As training alternates between the two networks, each improves in response to the other: the Generator learns to produce increasingly convincing images, and the Discriminator gets better at spotting fakes.
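
The alternating rounds can be illustrated with a deliberately tiny, hypothetical example: a one-dimensional GAN whose Generator is `G(z) = a*z + b` and whose Discriminator is `D(x) = sigmoid(w*x + c)`, with gradients derived by hand. This is not a DCGAN (there are no convolutions and no images), and `train_round` is an illustrative name. It only shows the structure of one round: the Discriminator takes a gradient step first, then the Generator takes a step against the updated Discriminator.

```python
import numpy as np


def sigmoid(u):
    return 1.0 / (1.0 + np.exp(-u))


def train_round(params, real, z, lr=0.1):
    """One alternating round of a hypothetical toy 1D GAN (not a DCGAN).

    Generator:     G(z) = a*z + b
    Discriminator: D(x) = sigmoid(w*x + c)
    params is a dict with keys "a", "b", "w", "c"; a new dict is returned.
    """
    a, b, w, c = params["a"], params["b"], params["w"], params["c"]

    # 1) Discriminator step: gradient descent on
    #    L_D = -mean(log D(real)) - mean(log(1 - D(fake)))
    fake = a * z + b
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    grad_w = np.mean(-(1 - d_real) * real) + np.mean(d_fake * fake)
    grad_c = np.mean(-(1 - d_real)) + np.mean(d_fake)
    w, c = w - lr * grad_w, c - lr * grad_c

    # 2) Generator step against the *updated* Discriminator: gradient descent on
    #    L_G = -mean(log D(G(z)))  (non-saturating generator loss)
    d_fake = sigmoid(w * (a * z + b) + c)
    grad_a = np.mean(-(1 - d_fake) * w * z)
    grad_b = np.mean(-(1 - d_fake) * w)
    a, b = a - lr * grad_a, b - lr * grad_b

    return {"a": a, "b": b, "w": w, "c": c}


rng = np.random.default_rng(0)
real = rng.normal(4.0, 1.0, size=256)  # stand-in "real data" centred at 4
z = rng.normal(0.0, 1.0, size=256)     # noise fed to the Generator
params = {"a": 1.0, "b": 0.0, "w": 0.0, "c": 0.0}
updated = train_round(params, real, z)
print(updated)
```

Starting from a Discriminator that scores everything 0.5, one round pushes `w` positive (real samples now score higher than fakes) and nudges the Generator’s offset `b` upward, toward the real data centred at 4.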

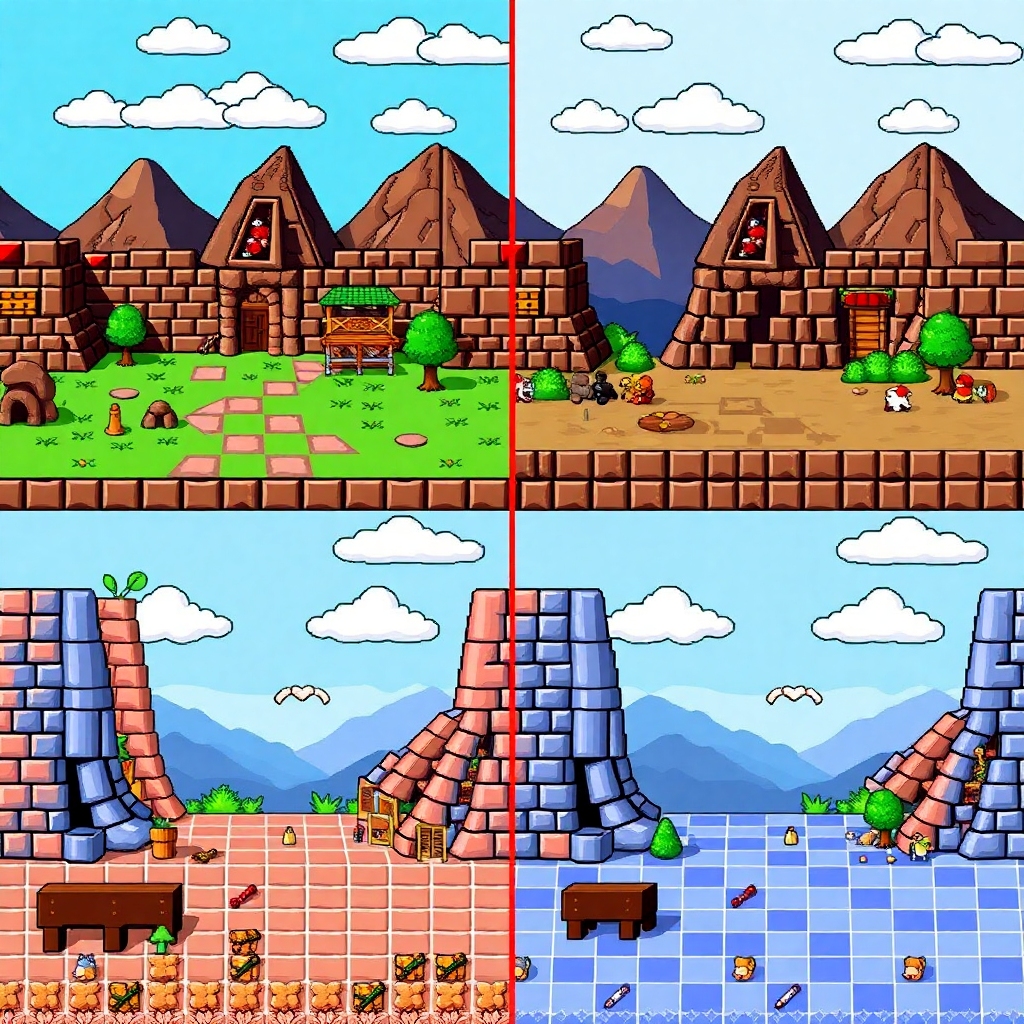

Applying DCGANs to Video Game Art

We apply DCGANs to generating unique artwork in the style of 1980s video games, specifically games for the Nintendo Entertainment System (NES). Training draws on a dataset of over 10,000 NES game screenshots, with the goal of producing convincing game sprites and tiles.
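
The post doesn’t describe how the screenshots were prepared, but one plausible step, assumed here, is cutting each frame into non-overlapping 16×16 tiles so the network trains on tile-sized images. A minimal NumPy sketch (the name `to_tiles` is hypothetical):

```python
import numpy as np

TILE = 16  # tile edge in pixels, matching the 16×16 tiles the post targets


def to_tiles(image: np.ndarray, tile: int = TILE) -> np.ndarray:
    """Cut an (H, W, C) image into non-overlapping tile×tile patches.

    Partial tiles at the right/bottom edges are dropped.
    Returns an array of shape (num_tiles, tile, tile, C), in row-major tile order.
    """
    h, w, ch = image.shape
    rows, cols = h // tile, w // tile
    cropped = image[: rows * tile, : cols * tile]
    return (
        cropped.reshape(rows, tile, cols, tile, ch)
        .transpose(0, 2, 1, 3, 4)  # -> (rows, cols, tile, tile, ch)
        .reshape(rows * cols, tile, tile, ch)
    )


# An NES frame is 256 pixels wide and 240 tall, so it splits into 16 × 15 = 240 tiles.
screenshot = np.zeros((240, 256, 3), dtype=np.uint8)  # placeholder for a real screenshot
tiles = to_tiles(screenshot)
print(tiles.shape)  # (240, 16, 16, 3)
```

The reshape/transpose approach avoids Python loops and returns a view-friendly layout that can be fed to a training pipeline as a batch of small images.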

The goal is not perfect graphics but usable 16×16 tiles that capture the essence of retro games. After the generated images are post-processed to fit the NES’s 64-color palette, the result is a set of unique pixel-art tiles that reflect the nostalgic aesthetic of the era.
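
The post also doesn’t specify how generated images were mapped onto the NES palette. A simple approach, assumed here, is to replace each pixel with the nearest palette color by Euclidean distance in RGB. Because the RGB values quoted for the NES palette differ between emulators and references, the sketch takes the palette as a parameter and uses a made-up four-color palette for the demo; `quantize_to_palette` is a hypothetical name.

```python
import numpy as np


def quantize_to_palette(image: np.ndarray, palette: np.ndarray) -> np.ndarray:
    """Replace every pixel with its nearest palette color (Euclidean distance in RGB).

    image:   (H, W, 3) array of RGB values
    palette: (P, 3) array of allowed RGB colors (e.g. the NES palette entries)
    """
    pixels = image.reshape(-1, 3).astype(np.int32)  # int32 avoids uint8 wraparound
    pal = palette.astype(np.int32)
    # Squared distance from every pixel to every palette entry: shape (H*W, P).
    dists = ((pixels[:, None, :] - pal[None, :, :]) ** 2).sum(axis=2)
    nearest = dists.argmin(axis=1)
    return palette[nearest].reshape(image.shape)


# Made-up 4-color palette for illustration only (not real NES values).
palette = np.array([[0, 0, 0], [255, 255, 255], [200, 40, 40], [40, 40, 200]], dtype=np.uint8)
tile = np.array([[[10, 5, 0], [250, 240, 255]],
                 [[180, 60, 30], [30, 50, 220]]], dtype=np.uint8)
print(quantize_to_palette(tile, palette))
# → pixels snap to black, white, (200, 40, 40) and (40, 40, 200)
```

Casting to `int32` before subtracting matters: with `uint8` arithmetic, differences such as `10 - 255` would wrap around and give wrong distances.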

Conclusion

Generative models like DCGANs open up new creative possibilities where art and technology meet. As researchers continue to push the boundaries, generating endless artwork may soon move from theory to practice.
