We all know and love Google Translate, the website that seems to magically translate between 100 different languages instantly. Available on our phones and smartwatches, this tool has transformed global communication by breaking down language barriers.

Many students have used Google Translate for homework over the years, but the technology behind the service has changed substantially in recent years. Deep learning has reshaped machine translation. Researchers with little expertise in linguistics can now build relatively simple translation systems that outperform systems hand-crafted by language experts.

The Evolution of Machine Translation

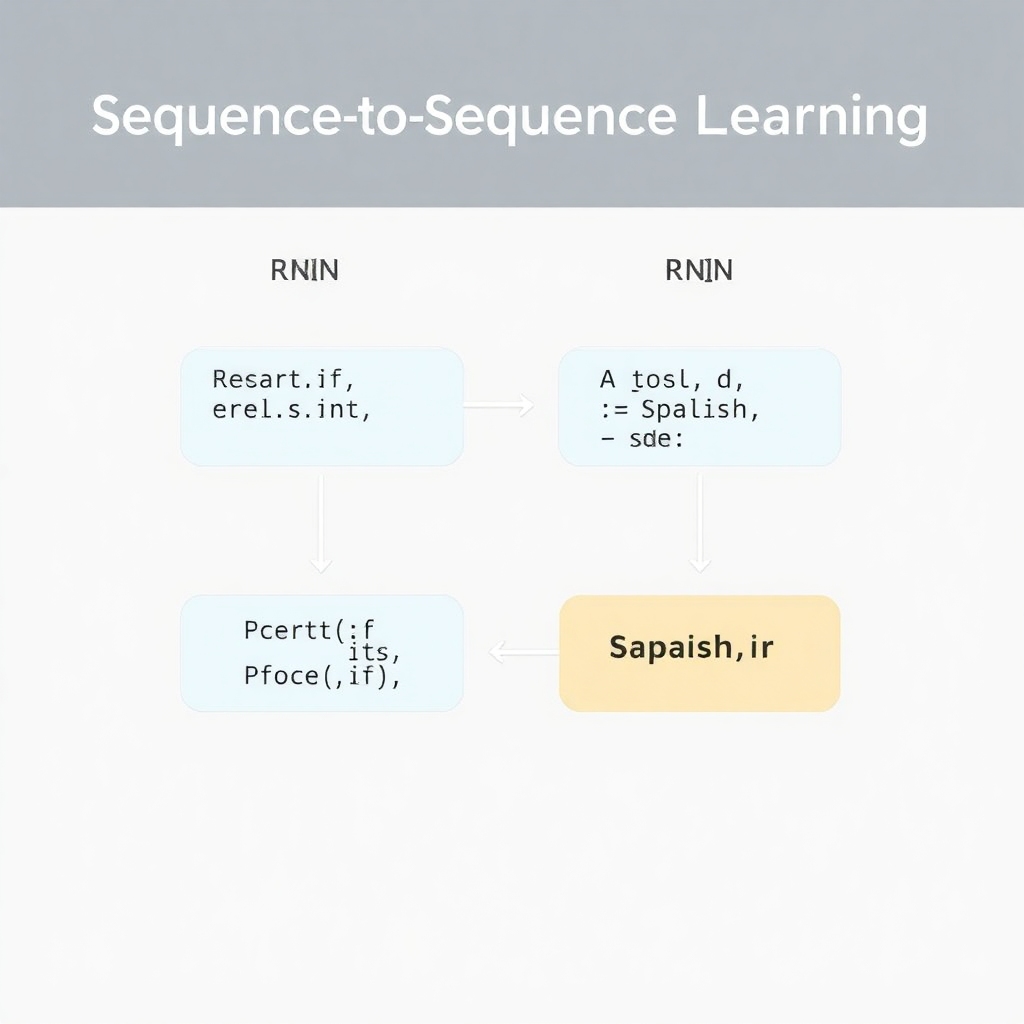

The technology driving this transformation is called sequence-to-sequence learning. It is a powerful technique with applications beyond translation, including AI chatbots and automatic image captioning.

Programming Computers for Language Translation

The simplest approach is to replace each word in a sentence with its translation in the target language. For instance, translating Spanish to English word by word gives:

- Word Replacement: “Quiero” becomes “I want.”

While this method is easy to implement with a dictionary, it neglects grammar and context, leading to poor translations.
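As a minimal sketch, word-by-word replacement needs only a lookup table. The dictionary below is a hypothetical toy example. The same function produces a correct sentence in one case and the wrong word order in another:

```python
# Hypothetical toy Spanish-to-English dictionary.
DICTIONARY = {
    "quiero": "I want",
    "ir": "to go",
    "a": "to",
    "la": "the",
    "playa": "beach",
    "casa": "house",
    "blanca": "white",
}


def word_by_word(sentence: str) -> str:
    """Replace each word with its dictionary entry, ignoring grammar and context."""
    # Words missing from the dictionary are passed through unchanged.
    return " ".join(DICTIONARY.get(w, w) for w in sentence.lower().split())


print(word_by_word("Quiero ir a la playa"))  # I want to go to the beach
print(word_by_word("la casa blanca"))        # the house white (wrong word order)
```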

To improve accuracy, the next step is to incorporate language-specific rules. This might include translating common phrases as units and adjusting the order of nouns and adjectives. Early translation systems relied heavily on linguists creating complex rules to handle these intricacies, but this approach struggled with the nuances of real-world language.
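The two kinds of rules mentioned above can be sketched as follows, assuming a hypothetical toy lexicon tagged with parts of speech. Known phrases are translated as units, and a single hand-written rule moves adjectives in front of nouns:

```python
# Hypothetical toy lexicon: Spanish word -> (English translation, part of speech).
LEXICON = {
    "la": ("the", "DET"),
    "casa": ("house", "NOUN"),
    "playa": ("beach", "NOUN"),
    "blanca": ("white", "ADJ"),
    "bonita": ("pretty", "ADJ"),
}

# Hypothetical multi-word phrases that are translated as a single unit.
PHRASES = {
    ("me", "gusta"): ("I like", "PHRASE"),
}


def rule_based(sentence: str) -> str:
    words = sentence.lower().split()
    tokens = []  # (English text, part of speech)
    i = 0
    while i < len(words):
        pair = tuple(words[i:i + 2])
        if pair in PHRASES:  # rule 1: translate known phrases as units
            tokens.append(PHRASES[pair])
            i += 2
        else:  # otherwise fall back to a single-word lookup
            tokens.append(LEXICON.get(words[i], (words[i], "UNK")))
            i += 1

    # Rule 2: Spanish usually places adjectives after nouns; English places them before.
    j = 0
    while j < len(tokens) - 1:
        if tokens[j][1] == "NOUN" and tokens[j + 1][1] == "ADJ":
            tokens[j], tokens[j + 1] = tokens[j + 1], tokens[j]
            j += 2
        else:
            j += 1
    return " ".join(text for text, _ in tokens)


print(rule_based("me gusta la casa blanca"))  # I like the white house
```

Every new construction needs another hand-written rule. That is why this approach struggled to keep up with real-world language.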

Statistics over Grammar: A New Approach

As rule-based systems struggled, researchers shifted to statistical models. By training on large collections of text that has already been translated between two languages, known as parallel corpora, computers could learn to translate more effectively.
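Learning from a parallel corpus can start with simple counting. The sketch below assumes the corpus has already been split into aligned phrase pairs, which real systems do with separate alignment tools. The pairs shown are hypothetical. Each translation's probability is estimated as the fraction of times it appears for a given Spanish phrase:

```python
from collections import Counter, defaultdict

# Hypothetical phrase pairs, as if already extracted and aligned from a parallel corpus.
ALIGNED_PAIRS = [
    ("quiero", "I want"),
    ("quiero", "I want"),
    ("quiero", "I love"),
    ("la playa", "the beach"),
    ("la playa", "the beach"),
    ("la playa", "the shore"),
    ("la playa", "the beach"),
]


def phrase_table(pairs):
    """Estimate P(english | spanish) by relative frequency."""
    counts = defaultdict(Counter)
    for spanish, english in pairs:
        counts[spanish][english] += 1
    table = {}
    for spanish, english_counts in counts.items():
        total = sum(english_counts.values())
        table[spanish] = {e: c / total for e, c in english_counts.items()}
    return table


table = phrase_table(ALIGNED_PAIRS)
print(table["la playa"])  # {'the beach': 0.75, 'the shore': 0.25}
```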

Thinking in Probabilities

Statistical translation does not look for a single exact translation. Instead, it generates many candidate translations and ranks them by how likely each one is to be correct, using probabilities estimated from the training data.
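One standard way to formalize this ranking is the noisy-channel model from early statistical translation research. It is a general textbook formulation, not necessarily what any particular system uses. Here $f$ is the source (for example, Spanish) sentence and $e$ ranges over candidate English sentences:

```latex
\hat{e} = \arg\max_{e} P(e \mid f)
        = \arg\max_{e} \frac{P(f \mid e)\,P(e)}{P(f)}
        = \arg\max_{e} P(f \mid e)\,P(e)
```

$P(f \mid e)$, the translation model, is estimated from the parallel corpus. $P(e)$, the language model, measures how natural the English sentence is. $P(f)$ can be dropped because it is the same for every candidate.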

Steps in Statistical Machine Translation:

- Chunking: Break the original sentence into smaller parts that can be easily translated.

- Finding Translations: For each chunk, identify potential translations derived from the training data. The frequency of translations provides a score for likelihood.

- Sentence Generation: Combine the chunks to produce various sentence structures and score them against a broad dataset of real-world sentences. The most human-like sentence becomes the final translation.

For example, if we attempt to translate “Quiero ir a la playa” (I want to go to the beach), the statistical model might generate several attempts, ultimately selecting the best one based on its training data.
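The three steps can be combined into a minimal sketch for this sentence. It assumes a hypothetical phrase table with made-up probabilities, plus a tiny hypothetical English corpus that stands in for "real-world sentences". Chunking is greedy longest-match. Fluency is scored with a bigram language model using add-one smoothing. Both are simplified stand-ins for what production systems used.

```python
import itertools
import math
from collections import Counter

# Hypothetical phrase table: Spanish chunk -> {English option: P(English | Spanish)}.
PHRASE_TABLE = {
    "quiero": {"I want": 0.7, "I love": 0.3},
    "ir a": {"to go to": 0.8, "go to": 0.2},
    "la playa": {"the beach": 0.8, "the shore": 0.2},
}

# Hypothetical sample of real-world English used to judge how natural a candidate sounds.
ENGLISH_CORPUS = [
    "I want to go to the beach",
    "I want to go to the store",
    "we love the beach",
    "they go to the shore",
]


def chunk(sentence):
    """Step 1: greedily split the sentence into the longest chunks found in the phrase table."""
    words = sentence.lower().split()
    chunks, i = [], 0
    while i < len(words):
        for n in range(len(words) - i, 0, -1):
            span = " ".join(words[i:i + n])
            if span in PHRASE_TABLE or n == 1:  # unknown single words become their own chunk
                chunks.append(span)
                i += n
                break
    return chunks


def train_bigrams(corpus):
    """Count word pairs so we can estimate P(next word | previous word)."""
    contexts, pairs = Counter(), Counter()
    for line in corpus:
        tokens = ["<s>"] + line.lower().split() + ["</s>"]
        contexts.update(tokens[:-1])
        pairs.update(zip(tokens, tokens[1:]))
    vocab_size = len({w for line in corpus for w in line.lower().split()}) + 2
    return contexts, pairs, vocab_size


def fluency(sentence, contexts, pairs, vocab_size):
    """Log-probability of a sentence under the bigram model, with add-one smoothing."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    return sum(
        math.log((pairs[(a, b)] + 1) / (contexts[a] + vocab_size))
        for a, b in zip(tokens, tokens[1:])
    )


def translate(sentence):
    """Steps 2 and 3: combine chunk translations and rank every candidate sentence."""
    model = train_bigrams(ENGLISH_CORPUS)
    options = [PHRASE_TABLE.get(c, {c: 1.0}).items() for c in chunk(sentence)]
    ranked = []
    for combo in itertools.product(*options):
        candidate = " ".join(english for english, _ in combo)
        translation_score = sum(math.log(p) for _, p in combo)
        ranked.append((translation_score + fluency(candidate, *model), candidate))
    return sorted(ranked, reverse=True)


for score, candidate in translate("Quiero ir a la playa")[:3]:
    print(f"{score:.2f}  {candidate}")
```

Adding log-probabilities is equivalent to multiplying the translation and fluency probabilities. This avoids numerical underflow on long sentences.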

A Major Breakthrough: Deep Learning for Translation

The real revolution in translation came in 2014 when researchers, including KyungHyun Cho, introduced a deep learning approach that requires significantly less human intervention. By employing recurrent neural networks (RNNs), they developed a system that could learn translation patterns based purely on data.

Recurrent Neural Networks

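Traditional neural networks are stateless and always produce the same output for the same input. RNNs, by contrast, carry a hidden state from one input to the next, so earlier inputs influence later outputs. This makes them well suited to processing sequences such as text.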
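A minimal sketch of a vanilla RNN in plain Python, using the standard update $h_t = \tanh(W_x x_t + W_h h_{t-1} + b)$, shows where the state comes in. The weights are hypothetical, not trained. Feeding the same inputs in a different order produces a different final state, because each step depends on the previous one:

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a vanilla RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_x[i], x))
            + sum(w * hj for w, hj in zip(w_h[i], h_prev))
            + b[i]
        )
        for i in range(len(b))
    ]


def run_rnn(inputs, w_x, w_h, b):
    h = [0.0] * len(b)  # the hidden state starts at zero
    for x in inputs:
        h = rnn_step(x, h, w_x, w_h, b)  # the new state depends on the old one
    return h


# Hypothetical tiny weights: 2-dimensional inputs, 2-dimensional hidden state.
W_X = [[0.5, -0.3], [0.8, 0.2]]
W_H = [[0.1, 0.4], [-0.6, 0.3]]
B = [0.0, 0.1]

sequence = [[1.0, 0.0], [0.0, 1.0]]
print(run_rnn(sequence, W_X, W_H, B))
print(run_rnn(sequence[::-1], W_X, W_H, B))  # same inputs, reversed order
```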

Encodings

An encoding reduces complex data, such as a sentence, to a fixed-size list of numbers. For translation, an RNN reads a sentence one word at a time, and its final hidden state serves as an encoding intended to capture the meaning of the whole sentence.
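The sketch below shows the fixed-size property: sentences of different lengths all map to vectors of the same size. The word vectors and weights are hypothetical random values rather than trained ones, so these encodings do not yet capture meaning. Training is what would make similar sentences land on similar vectors.

```python
import math
import random

HIDDEN = 4  # size of the encoding (hypothetical)
EMBED = 3   # size of each word vector (hypothetical)

rng = random.Random(0)
VOCAB = ["quiero", "ir", "a", "la", "playa", "casa"]
# Random word vectors stand in for learned embeddings.
EMBEDDINGS = {w: [rng.uniform(-1, 1) for _ in range(EMBED)] for w in VOCAB}
W_X = [[rng.uniform(-0.5, 0.5) for _ in range(EMBED)] for _ in range(HIDDEN)]
W_H = [[rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)] for _ in range(HIDDEN)]


def encode(sentence):
    """Read the sentence word by word; the final hidden state is the encoding."""
    h = [0.0] * HIDDEN
    for word in sentence.lower().split():
        x = EMBEDDINGS[word]
        h = [
            math.tanh(
                sum(W_X[i][k] * x[k] for k in range(EMBED))
                + sum(W_H[i][j] * h[j] for j in range(HIDDEN))
            )
            for i in range(HIDDEN)
        ]
    return h


short_enc = encode("la playa")
long_enc = encode("quiero ir a la playa")
print(len(short_enc), len(long_enc))  # 4 4
```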

The Translation Process

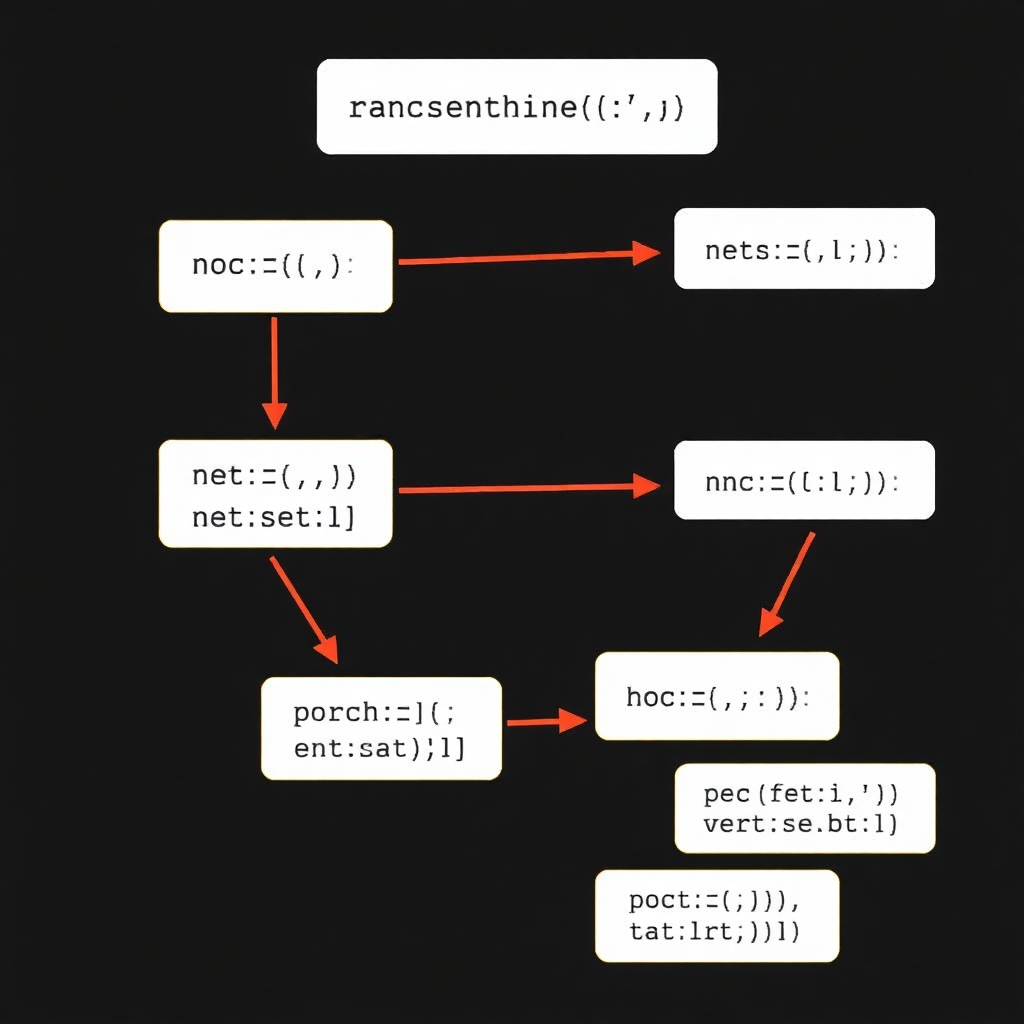

To implement translation using RNNs, two interconnected networks are created:

- The encoder RNN reads the input sentence and converts it into an encoding, a fixed-length list of numbers.

- The decoder RNN takes this encoding and, instead of reconstructing the original sentence, generates an equivalent sentence in the target language.

This approach translates sentences without predefined grammatical rules, relying solely on patterns the model learns from example translations.
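The encoder-decoder pair can be sketched in plain Python with greedy decoding. The decoder starts from the encoding, repeatedly feeds back the word it just produced, and stops at an end-of-sentence token. All vocabularies, sizes, and weights here are hypothetical, and the model is untrained, so its output words are arbitrary. Training would adjust the weights until the decoder emits the reference translations.

```python
import math
import random

rng = random.Random(42)
HIDDEN, EMBED = 4, 3  # hypothetical sizes


def matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def rnn_step(x, h, w_x, w_h):
    """Vanilla RNN update shared by the encoder and the decoder."""
    return [
        math.tanh(sum(a * b for a, b in zip(w_x[i], x)) + sum(a * b for a, b in zip(w_h[i], h)))
        for i in range(len(h))
    ]


SRC_VOCAB = ["quiero", "ir", "a", "la", "playa"]
TGT_VOCAB = ["<go>", "<eos>", "i", "want", "to", "go", "the", "beach"]
SRC_EMB = {w: [rng.uniform(-1, 1) for _ in range(EMBED)] for w in SRC_VOCAB}
TGT_EMB = {w: [rng.uniform(-1, 1) for _ in range(EMBED)] for w in TGT_VOCAB}

ENC_WX, ENC_WH = matrix(HIDDEN, EMBED), matrix(HIDDEN, HIDDEN)
DEC_WX, DEC_WH = matrix(HIDDEN, EMBED), matrix(HIDDEN, HIDDEN)
W_OUT = matrix(len(TGT_VOCAB), HIDDEN)  # hidden state -> one score per target word


def encode(sentence):
    h = [0.0] * HIDDEN
    for word in sentence.lower().split():
        h = rnn_step(SRC_EMB[word], h, ENC_WX, ENC_WH)
    return h


def decode(encoding, max_len=10):
    """Greedy decoding: always pick the highest-scoring next word."""
    h, prev, output = encoding, "<go>", []
    for _ in range(max_len):
        h = rnn_step(TGT_EMB[prev], h, DEC_WX, DEC_WH)
        scores = [sum(w * hi for w, hi in zip(row, h)) for row in W_OUT]
        # Skip index 0 so the start token "<go>" is never emitted.
        best = max(range(1, len(TGT_VOCAB)), key=lambda k: scores[k])
        prev = TGT_VOCAB[best]
        if prev == "<eos>":
            break
        output.append(prev)
    return output


print(decode(encode("Quiero ir a la playa")))
```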

The Potential of Sequence-to-Sequence Models

Sequence-to-sequence models are useful far beyond language translation. Trained on large datasets of dialogue, they can power AI chatbots that handle tech support questions or hold human-like conversations.
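For a chatbot, the "source" and "target" sequences are consecutive turns in a conversation rather than sentences in two languages. As a minimal sketch, assuming a hypothetical support transcript, each user message can be paired with the agent reply that follows it to form training examples:

```python
# Hypothetical support-chat transcript; each turn is (speaker, text).
TRANSCRIPT = [
    ("user", "My VPN keeps disconnecting."),
    ("agent", "Which operating system are you using?"),
    ("user", "Windows 10."),
    ("agent", "Please try reinstalling the VPN client."),
]


def make_pairs(transcript):
    """Pair each user turn with the agent reply that immediately follows it."""
    return [
        (prev_text, next_text)
        for (prev_speaker, prev_text), (next_speaker, next_text) in zip(transcript, transcript[1:])
        if prev_speaker == "user" and next_speaker == "agent"
    ]


for source, target in make_pairs(TRANSCRIPT):
    print(source, "->", target)
```

The model is then trained exactly as in translation: encode the user's message, and decode the agent's reply.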

Here’s a sample interaction between a user and an AI chatbot, demonstrating its conversational abilities:

Human: Why is my connection refused?

Machine: Can you tell me the version of your network connection software?

Human: I’m not sure.

Machine: Is the network connection asking for a user certificate?

Human: Yes.

Conclusion

The evolution of machine translation, propelled by deep learning and RNNs, marks a shift from hand-written grammar rules to systems that learn directly from data. The same sequence-to-sequence techniques already extend beyond translation, to chatbots and image captioning.
